Cyborg Analytics I

My last post argues that an awful lot of analytics, not only those produced by machine learning, require human interpretation.

This is hardly a new observation, of course. A while ago Johns Hopkins PHA tweeted a quotation from Jonathan Weiner, the cofounding director of the Johns Hopkins Center for Population Health IT, which arose during a discussion on data stewardship:

“I have never seen computer analytics do a better job than a combination of humans and computers,” said Weiner. “As we develop the data, the human interactions, the human interfaces, are just as important – if not more – than advanced computer science techniques.”

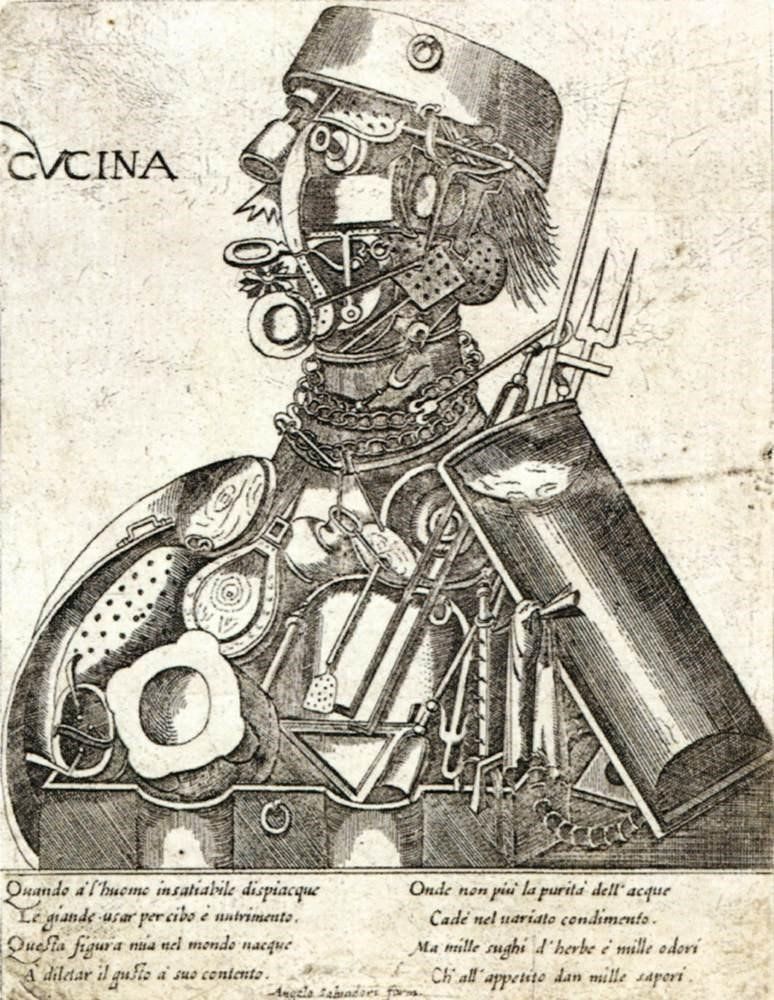

This combination of human and algorithm might seem at first to create a ‘cyborg analytics’ – not fully human, with an underlying burnished glint of metal, but close enough that its products have that whiff of the alien that places them firmly in the ‘uncanny valley’. That might actually be more off-putting than the HAL-like blank-faced computer that makes decisions that remain ultimately unexplained and unexplainable.

However, Weiner’s combination of humans and computers might be better thought of as a partnership, with each half of the relationship bringing something unique to the whole. And for that partnership to work, there must be an explanatory element in each and every algorithm – something which goes a measurable way to providing understanding about how any given result was arrived at. This is the core aspect of the ‘Wolves and Huskies’ example I discussed last time.

But what is it that the human analyst brings to the partnership that is so necessary? One answer might be something akin to ‘tacit knowledge’. I first came across this notion many years ago, at a retirement party for a field archaeologist who had spent much of her working life with the British Museum. She explained how, when assessing certain types of finds, the trainee slowly acquired a sense of how to interpret them. The accumulated layers of expertise came about through exposure to many different objects, over time, and also through learning from others’ views about them. She was profoundly dismissive of certain types of analytical techniques – ‘those of the “bean counters”’ – which were loved by accountants and their ilk. For her, the knowledge needed by an expert professional in the field was something that was slightly evanescent, and which could only be developed through years of deep experience.

In one sense this isn’t quite right – or at least this binary division between the bean counters and the field workers leaves a lot out. I know of several senior accountants who speak of when the books they are auditing just don’t ‘smell’ right. There is something about them which they can’t explain, but which forces them to look deeper to see where the discrepancies are, and what dark deeds against the Gods of fiscal rectitude are hidden. At the same time, field archaeology has been revolutionised by a whole suite of tools which make on-site accurate measurements far easier to take, improving stratigraphy, dating and assessment of provenance immeasurably.

Equally, this notion that one learns through doing – that an understanding of archaeological finds grows as one is exposed to more and more examples, and hears what other experts say about them – sounds suspiciously like many machine learning methods. Thus, expert radiographers, who have built their understanding of what an X-ray is really showing through years of hard-won experience, can now be equalled, in part, by carefully trained algorithms looking at the self-same images. And there is no problem in recruitment when it comes to the algorithms. Some of the claims about tacit knowledge might begin to seem like special pleading. But it isn’t quite as simple as that; the AI assessments still need verification – and there are hard cases that are undecidable by the algorithmic tools. So human judgement is required, and that judgement is only arrived at through those years of experience.
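
For the more technically minded, one common way of wiring up this kind of partnership is to let the algorithm keep the cases it is confident about and refer the rest to a human expert. What follows is only a minimal sketch of that idea, not a description of any real radiology system: the `Assessment` class, the `triage` function, the 0.9 threshold and the three sample cases are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    """One algorithmic reading of one image (hypothetical structure)."""
    case_id: str
    label: str
    confidence: float  # the model's own score for its label, from 0.0 to 1.0


def triage(assessments, threshold=0.9):
    """Split readings into those accepted as-is and those referred to a human.

    The threshold is an assumed value; in practice it would be chosen by the
    domain experts, not hard-coded by the programmer.
    """
    accepted, referred = [], []
    for a in assessments:
        if a.confidence >= threshold:
            accepted.append(a)
        else:
            referred.append(a)  # the hard case goes to the experienced human
    return accepted, referred


# Invented sample data, purely for illustration.
cases = [
    Assessment("scan-001", "fracture", 0.97),
    Assessment("scan-002", "no fracture", 0.55),
    Assessment("scan-003", "fracture", 0.91),
]

accepted, referred = triage(cases)
print("accepted:", [a.case_id for a in accepted])
print("referred:", [a.case_id for a in referred])
```

Even in this toy version, the interesting decision, where to set the threshold, is one that only someone with years of experience of the hard cases is well placed to make.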

Perhaps a better way to think about this is ‘domain knowledge’. Going back to our example of Wolves and Huskies, the human test subjects who were spotting how the computer had made its mistakes were accessing a wider, associated web of knowledge that included an understanding of weather, colour, and the habits of huskies and wolves. This wasn’t simply limited to the training data captured in the photographs. The human partner in Weiner’s unCyborgian combined analytics brings that wider knowledge of connections between many, many other facts.

One final thought: this has a direct bearing on recruitment. When assembling a team to build an AI tool that solves a real-world problem, the domain experts must be seen as at least as important as the computer scientists who understand how the algorithms are best built, and how the tech is most efficiently exploited. Both are essential.

And on both sides, there is a requirement to speak the other’s language, to some degree at least.

Copyright ©2021 Graham Head. All rights reserved. Image backgrounds © 2021 The estate of Martin Herbert. All rights reserved.